Deep Non-Line-of-Sight Reconstruction

Javier Grau Chopite, Matthias B. Hullin, Michael Wand, Julian Iseringhausen

Proc. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2020.

Abstract

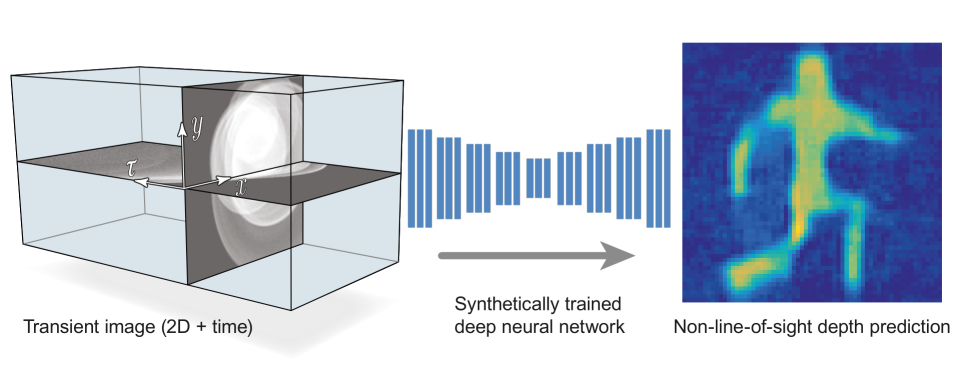

The recent years have seen a surge of interest in methods for imaging beyond the direct line of sight. The most prominent techniques rely on time-resolved optical impulse responses, obtained by illuminating a diffuse wall with an ultrashort light pulse and observing multi-bounce indirect reflections with an ultrafast time-resolved imager. Reconstruction of geometry from such data, however, is a complex non-linear inverse problem that comes with substantial computational demands. In this paper, we employ convolutional feed-forward networks for solving the reconstruction problem efficiently while maintaining good reconstruction quality. Specifically, we devise a tailored autoencoder architecture, trained end-to-end, that maps transient images directly to a depth map representation. Training is done using an efficient transient renderer for diffuse three-bounce indirect light transport that enables the quick generation of large amounts of training data for the network. We examine the performance of our method on a variety of synthetic and experimental datasets and its dependency on the choice of training data and augmentation strategies, as well as architectural features. We demonstrate that our feed-forward network, even though it is trained solely on synthetic data, generalizes to measured data from SPAD sensors and is able to obtain results that are competitive with model-based reconstruction methods.

Files

- Full Paper (PDF): GrauEtAl-DeepNLoS-CVPR2020.pdf

- Supplemental Document (PDF): GrauEtAl-DeepNLoS-CVPR2020-supp.pdf

- Supplemental Dataset(s): GrauEtAl-DeepNLoS-CVPR2020-suppData.zip

BibTeX Citation

@InProceedings{GrauCVPR2020,
  author    = {Javier {Grau Chopite} and Matthias B. Hullin and Michael Wand and Julian Iseringhausen},
  title     = {Deep Non-Line-of-Sight Reconstruction},
  booktitle = {IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  year      = {2020},
  month     = {June},
}
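
Illustrative Sketch

The abstract refers to transient images produced by diffuse three-bounce light transport (wall, hidden object, wall). As a rough illustration of the time-of-flight geometry behind such data, the following toy sketch bins the contribution of isolated hidden points into a transient histogram for one laser spot and one sensed spot on the wall. It is not the paper's renderer: the function name `three_bounce_transient`, its parameters, the point-scatterer scene model, and the simplified inverse-square falloff without cosine terms are all assumptions made for this example.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def three_bounce_transient(laser_spot, sensor_spot, hidden_points,
                           bin_width_s, num_bins):
    """Toy transient histogram for one laser/sensor spot pair on the wall.

    Modeled path: laser spot on wall -> hidden point -> sensed spot on wall.
    The legs from the laser to the wall and from the wall to the detector
    are assumed to be calibrated out (hypothetical simplification).
    """
    hist = [0.0] * num_bins
    for p in hidden_points:
        r1 = math.dist(laser_spot, p)    # wall -> hidden point
        r2 = math.dist(p, sensor_spot)   # hidden point -> wall
        time_of_flight = (r1 + r2) / C
        bin_index = int(time_of_flight / bin_width_s)
        if bin_index < num_bins:
            # Inverse-square falloff on each leg; cosine (foreshortening)
            # terms and surface albedo are deliberately omitted here.
            hist[bin_index] += 1.0 / (r1 * r1 * r2 * r2)
    return hist


if __name__ == "__main__":
    # Confocal setup: laser and sensed spot coincide; one point 1 m away.
    h = three_bounce_transient((0.0, 0.0, 0.0), (0.0, 0.0, 0.0),
                               [(0.0, 0.0, 1.0)],
                               bin_width_s=1e-10, num_bins=128)
    peak = max(range(len(h)), key=h.__getitem__)
    print("peak bin:", peak)
```

With 100 ps bins, a point 1 m from the wall produces a round trip of 2 m, or roughly 6.67 ns, so the single peak falls in bin 66. A learned reconstruction of the kind the abstract describes would take many such histograms, one per wall position, as its input.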